2017年8月6日星期日

Microsoft SQL Server Integration Services: SSIS Naming conventions

Microsoft SQL Server Integration Services: SSIS Naming conventions: In 2006 Jamie Thomson came up with naming conventions for SSIS tasks and data flow components. These naming conventions make your packages...

2017年8月5日星期六

Analytical Method Development and Validation: A Concise Review

- *Corresponding Author:

- Ashish Chauhan

SMPIC, NIPER, Mohali

Punjab, India

E-mail: aashishchauhan26@gmail.com

Received Date: January 28, 2015; Accepted Date: February 14, 2015; Published Date: February 23, 2015

Citation: Chauhan A, harti Mittu B, Chauhan P (2015) Analytical Method Development and Validation: A Concise Review. J Anal Bioanal Tech 6: 233. doi: 10.4172/2155-9872.1000233

Copyright: ©2015 Chauhan A, et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Visit for more related articles at Journal of Analytical & Bioanalytical Techniques

SMPIC, NIPER, Mohali

Punjab, India

E-mail: aashishchauhan26@gmail.com

Abstract

Analytical method development and validation are the continuous and inter-dependent task associated with the research and development, quality control and quality assurance departments. Analytical procedures play a critical role in equivalence and risk assessment, management. It helps in establishment of product-specific acceptance criteria and stability of results.Validation should demonstrate that the analytical procedure is suitable for its intented purpose. Design of experiment is a powerful tool for the method characterization and validation. Analytical professionals should be comfortable to use it to characterize and optimize the analytical method. An effective analytical method development and its validation can provide significant improvements in precision and a reduction in bias errors. It can further help to avoid costly and time consuming exercises.

Keywords

Validation; Stability; Precision; Accuracy; SOP

Introduction

Analytical method development

Analytical Chemistry is the branch of Science that uses advance technologies in determining the composition by analytical technique. We can achieve both qualitative as well as quantitative results. Analytical instruments play a major role in the process to achieve high quality and reliable analytical data. Thus everyone in the analytical laboratory should be concerned about the quality assurance of equipment.

Analytical method could be spectral, chromatographic, electrochemical, hyphenated or miscellaneous. Analytical methoddevelopment is the process of selecting an accurate assay procedure to determine the composition of a formulation. It is the process of proving that an analytical method is acceptable for use in laboratory to measure the concentration of subsequent samples Analytical methods should be used within GMP and GLP environments and must be developed using the protocols and acceptance criteria set out in the ICH guidelines Q2(R1). The prerequisite for method development are as follows [1-4]:

1. Qualified and calibrated instruments

2. Documented methods

3. Reliable reference standards

4. Qualified analysts

5. Sample selection and integrity

6. Change control

An analytical procedure is developed to test a defined characteristic of the substance against established acceptance criteria for that characteristic. In the development of a new analytical procedure, the choice of analytical instrumentation and methodology should be based on the intended purpose and scope of the analytical method. The important parameters that may be evaluated during method development are specificity, linearity, limits of detection (LOD) and quantitation limits (LOQ), range, accuracy and precision (Table 1). During early stages of method development, the robustness of methods should be evaluated because this characteristic ultimately helps to decide which method will be approved. Analytical procedures development are primarily based on a combination of mechanistic understanding of the basic methodology and prior experiences. Experimental data from early procedures can be used to guide further development.

| Parameters | Identification | Impurities QuantitativeLimit | Assay | |

|---|---|---|---|---|

| Accuracy | - | + | - | + |

| Precision | - | + | - | + |

| Specificity | + | + | + | + |

| Detection limit | - | - | + | - |

| Quantitation limit | - | + | - | - |

| Linearity | - | + | - | + |

| Range | - | + | - | + |

| Robustness | + | + | + | + |

Table 1: The parameters of an analytical procedure.

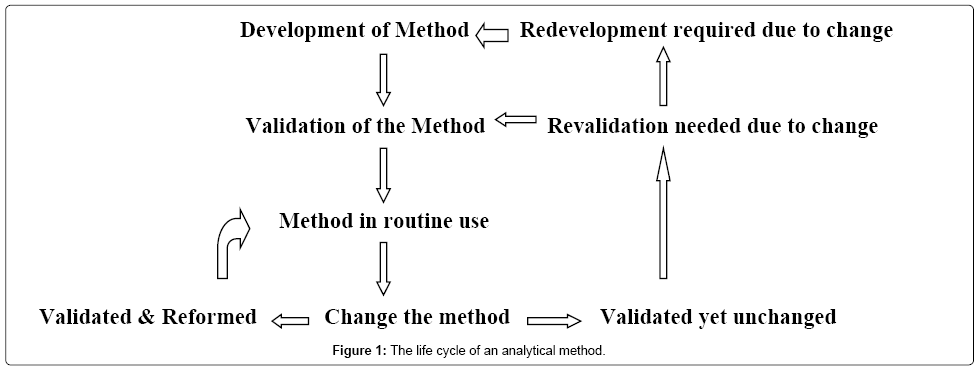

The life cycle of an analytical method is brief as shown in Figure 1. The common steps followed in the method development are as follows:

1. Standard analyte characterization

2. Method requirements

3. Literature search

4. Selecting the method

5. Instrumental setup and preliminary studies

6. Optimization of parameters

7. Documentation of analytical figure

8. Evaluation of the method development with the sample

9. Determination of percent recovery of the sample

10. Demonstration of quantitative sample analysis

The ability to provide accurate, reliable and consistent data is the motive of the analytical chemist. Method development procedures are complex, extended and expensive endeavors. An analytical method details the steps and techniques necessary to perform an analysis. This may include: preparation of samples, standards and reagents; use of apparatus; generation of the calibration curve, use of the formulae for the calculation etc. Analytical Method Development is required for [1-4]:

1. Herbal products and their potency

2. New process and reactions

3. New molecules development

4. Active ingredients (Macro analysis)

5. Residues (Micro analysis)

6. Impurity profiling

7. Component of interest in different proportion

8. Degradation studies

Need of analytical method development and validation

The need of validation of the analytical method development and validation emerged due to international competition, maintaining the standard of products in high commercial & market value and ethical reasons. Various International Regulatory Agencies have set the standard and fixed the protocol to match the reference for granting approval, authentication and registration. Some of the famous organizations governing the quality standards are:

1. United States Food and Drug Administration (US FDA)

2. Current Good Manufacturing Practice (cGMP) regulations

3. Good Laboratory Practice (GLP) regulations.

4. The Pharmaceutical Inspection Cooperation Scheme’s (PIC/S)

5. Pharmaceutical Inspection Cooperation Scheme (PIC/S)

6. The International Conference for Harmonization (ICH)

7. ISO/IEC 17025

8. World Health Organization (WHO)

When some changes are made in the validated nonstandard methods, the influence of such changes should be documented and a new validation should be carried out. If standard methods are available for a specific sample test, the most recent edition should be used. Validation includes specification of requirements, determination of method characteristics, a check that the requirements can be fulfilled by using the method, a statement on validity [5-8].

To fully understand the effect of changes in method parameters on an analytical procedure, adopt a systematic approach for method robustness study (design of experiments with method parameters) followed by an initial risk assessment and multivariate experiments. Such approaches allow us to understand parameter effects on method performance. Evaluation of a method’s performance may include analyses of samples obtained from in-process manufacturing stages to the finished product. The information obtained during these studies on the sources of method variation can help to assess the method’s performance.

Validation of the method

Data quality is assured by the combination of four components: analytical instrument qualification (AIQ); analytical method validation; system suitability tests and quality control checks. Validationof an analytical method is intended to demonstrate that it is suitable for its intended use. We generally validate the method under following conditions:

1. During method development

2. Checking the system suitability

3. Change of application, environment, analyst

4. While using after a prolonged period of time

5. Checking reliability and consistency

The type of method and analytical technique used will determine the nature and extent of the validation studies required. The most common methods for validation are identification, assay and impurities determination [5-8].

The validation report details the results of the validation study. Its purpose is to provide the information on the characteristics on the basis of which they were tested during the study, the results obtained and the interpretation of those results. Typical information in a validation report includes:

1. Validation protocol.

2. Analytical method

3. The validation parameters

4. The results

5. Interpretation of the results

6. Relevant validation information

7. Details of the reference materials

8. Details of batch number

9. Details of the equipment used for the study

10. References to the laboratory details

Typical validation parameters recommended by FDA, USP, and ICH are as follows:

1. Specificity

2. Linearity and Range

3. Precision

(A) Method precision (Repeatability)

(B) Intermediate precision (Ruggedness)

4. Accuracy

5. Solution stability

6. Limit of Detection (LOD)

7. Limit of Quantification (LOQ)

8. Robustness

Method validation is a vast area which includes many validation parameters with different approaches for different level of requirements based on intended use of analytical method. Validated method elucidates the unpredicted or unknown problem during the course of routine usage. Validated method has limited level of confidence. After method development it needs to be validated as per requirement that gives certain level of confidence for its intended use.

Criteria of Validation

The validation of an analytic method demonstrates the scientific soundness of the measurement or characterization. It is required to varying extents throughout the regulatory submission process. The validation practice demonstrates that an analytic method measures the correct substance, in the correct amount and in the appropriate range for the samples. It allows the analyst to understand the behavior of the method and to establish the performance limits of the method [9-11].

In order to perform method validation, the laboratory should follow a written standard operating procedure (SOP) that describes the process of conducting method validation. The laboratory should use qualified and calibrated instrumentation. There should be a welldeveloped and documented test method and an approved protocol prior to validation. The protocol is a systematic plan that describes which method performance parameters should be tested, how the parameters will be assessed with its acceptance criteria. Like in case of Pharmaceuticals, an API or drug product, placebos and reference standards are needed to perform the validation experiments.

Accuracy is the closeness of agreement between the values found. The value accepted as a conventional true value or the accepted reference value. Several methods of determining accuracy are available. It can be screened by the use of an analytical procedure to an analyte of known purity, by comparison of the results of the proposed analytical procedure with those of a second accepted procedure, the accuracy of which is stated and defined. It can also be inferred once precision, linearity and specificity have been established [3-9].

Precision of an analytical procedure expresses the closeness of agreement between a series of measurements obtained from multiple sampling of the same homogeneous sample under the prescribed conditions. It can be sub divided into repeatability, intermediate precision and reproducibility. The standard deviation, relative standard deviation like coefficient of variation and confidence interval should be reported for each type of precision investigated.

1. Repeatability should be assessed using a minimum of 9 determinations covering the specified range for the procedure by 3 replicates or 6 determinations at 100% of the test concentration.

2. Immediate precision depends upon the circumstances under which the procedure is intended to be used. The specific day, analyst performing, equipment are the random events that cast effect on the precision of the analytical procedure. It is not considered necessary to study these effects individually. The use of an experimental design should be encouraged.

3. Reproducibility is assessed by means of an inter-laboratory trial. Reproducibility should be considered in case of the standardization of an analytical procedure.

4. Specificity is the ability to assess the analyte for the presence of various components that may be present. It can be established by a number of approaches, depending on the intended purpose of the method. The ability of the method to assess the analyte of interest in a drug product is determined by a check for interference by placebo. Specificity can be assessed by measurement of the API in samples that are spiked with impurities or degradants. If API-related compounds are not available, drug can be stressed or force-degraded in order to produce degradation products. In chromatographic separations, apparent separation of degradants may be confirmed by peak purity determinations by photodiode array, mass purity determinations by mass spectroscopy (MS) or by confirming separation efficiency using alternate column chemistry. During forced degradation experiments, degradation is targeted at 5 to 20% degradation of the API, in order to avoid concerns about secondary degradation. Lack of specificity of an individual analytical procedure may be compensated by other supporting analytical procedures.

5. The detection limit of an individual analytical procedure is the lowest amount of analyte in a sample that can be detected. It can be determined visually, by signal to noise ratio, standard deviation of the response and the slope. Detection limit signal to noise approach can only be applied to analytical procedures which exhibit baseline noise. Comparing measured signals from samples with known concentrations of analyte with those of blank samples and establishing the minimum concentration at which the analyte can be reliably detected. A signalto- noise ratio between 3 or 2:1 is generally considered acceptable for estimating the detection limit. The detection limit (DL) may be expressed as: DL=3.3 σ/ S where, σ is the standard deviation of the response, S is the slope of the calibration curve. The slope S may be estimated from the calibration curve of the analyte. The estimate of σ may be carried out in a variety of ways, based on the standard deviation of the blank and the calibration curve [2-9]

6. The linearity of an analytical procedure is its ability to obtain test results that are directly proportional to the concentration of analyte in the sample. Test results should be evaluated by appropriate statistical methods, by calculation of a regression line like by the method of least squares. correlation coefficient, y-intercept, slope of the regression line and residual sum of squares for which a minimum of five concentrations are recommended.

7. The range of an analytical procedure is the interval between the upper and lower concentration of analyte in the sample for which it has been demonstrated that the analytical procedure has a suitable level of precision, accuracy and linearity.

8. Robustness is typically assessed by the effect of small changes in chromatographic methods on system suitability parameters such as peak retention, resolution and efficiency. Experimental factors that are typically varied during method robustness evaluations include: (i) age of standards and sample preparations (ii) sample analysis time (iii) variations to pH of mobile phase (iv) variation in mobile phase composition (v) analysis temperature (vi) flow rate (vii) column manufacturer (viii) type and use of filter against centrifugation. Robustness experiments are an ideal opportunity to utilize statistical design of experiments, providing data-driven method control.

The ICH guidance on validation distinguishes the types of methods according to the purpose of the method and lists suitable evaluation type. The ICH guidlines suggests detailed validation schemes relative to the purpose of the methods. It lists recommended data to report for each validation parameter. Acceptance criteria for validation must be based on the previous performances of the method, the product specifications and the phase of development.

As previously mentioned, the path to validation forms a continuum. It begins in the early phases of development as a set of informal experiments that establishes the soundness of the method for its intended purpose. It is expanded throughout the regulatory submission process into a fully-documented report that is required for commercial production. It is repeated whenever there is a significant change in instrumentation, method, specifications and process [1-11]

Issues and Challenges

For a method development and validation program to be successful, a holistic approach is recommended. A common challenge encountered during methods development and validation is that methods are typically developed by the research and development department whereas validation is typically the responsibility of a quality assurance and quality control. It’s important that all the groups work as one team. Various groups may be responsible for ensuring the suitability of the methods to support various phases and commercial manufacturing. The transfer of analytical methods from one group to another then becomes an important step for ensuring that the proper validation is in place to justify its intended use. Because the method will be run by several groups during its progress from development to validation but the method must be robust. A common weakness in development and validation of methods is that the methods are not robust enough. If robustness is not built into methods early in development then the results are likely to lack efficiency in quality testing and encounter lengthy and complicated validation process. It is achieved by conducting forced-degradation studies. The design and execution of these studies requires thorough knowledge of the product being tested as well as a good understanding of the analysis technique. New regulatory guidelines are being published that govern the expectations of regulatory agencies throughout the world for methods development and validation. There is need to meet current regulatory standards. From a simple method improvement to a complete redevelopment and subsequent implementation is tedious task. For this reason, one must be alert to current trends in regulatory guidelines and to adopt a proactive approach to changes that may affect development and validation programs. Finally, one of the key requirements for methods validation is that only well-characterized reference materials with proper documented purities should be used during method validation [1-15].

Conclusion

Analytical method development helps to understand the critical process parameters and to minimize their influence on accuracy and precision. Analytical methods should be used by following GMP and GLP guidelines and must be developed using the protocols and acceptance criteria set out in the ICH guidelines Q2(R1). Method validation helps to validate the analytical method for a range of concentrations so that the change in formulation or concentration do not require additional validation. Once the methods have been developed, qualified and validated the impact they have on out-ofspecification rates and process capability needs to be quantified and evaluated to determine its effectiveness for future use.

References

- (2000) International Conference on Harmonization (ICH) of Technical Requirements for Registration of Pharmaceuticals for Human Use, Topic Q7: Good Manufacturing Practices for Pharmaceutical Ingredients.

- Current Good Manufacturing Practices for finished Pharmaceuticals, 21 CFR, Parts 210 and 211, US Food and Drug Administration.

- European Commission (2001) Final Version of Annex 15 to the EU Guide toGood Manufacturing Practice: Qualification and validation: 4 1-10.

- McDowall RD (2005) Effective and Practical risk management options for computerized system validation, Quality Assurance Journal 9 (3): 196-227

- Bansal KS, Layloff T, Bush ED, Hamilton M, Hankinson EA, et al. (2004) Qualification of Analytical Instruments for Use in the Pharmaceutical Industry: a Scientific Approach. AAPS Pharm Sci Tech 5: 1-8.

- Bedson P, Sargent M (1996)The development and application of guidance on equipment qualification of analytical instruments.AccredQual. Assurance 1: 265-274.

- OonaMcPolin (2009) Validation of Analytical Methods for Pharmaceutical Analysis, Mourne Training Services, 14Burren Road, Warren point Co. Down BT34 3SA.

- FDA (2000) Guidance for Industry: Analytical Procedures and Method Validation, Chemistry, Manufacturing, and Controls Documentation, U.S. Department of Health and Human Services.

- International Conference on Harmonization (1994) Validation of analytical procedures: text and methodology Q2(R1).

- USP (2009) Chromatography System Suitability. United States Pharmacopeial Convention.

- Chan CC,Lam H, Lee YC, Zhang XM (2004) Analytical Method Validation and Instrument Performance Verification, Hoboken, John Wiley & Sons (Wiley Inter science),New Jersey.

- Robert W. Lee,Laurie Goldman The Central Role Of Analytic Method Development And Validation In Pharmaceutical Development. Life Science Connect 1-3

- Burdick RK, LeBlond D, Sandell D, Yang H. (2013) Statistical methods for validation of procedure accuracy and precision. PharmacopeialForum 39:(3)

- NethercoteP, Ermer J. (2013) Quality by design for analytical methods: implications for method validation and transfer. Pharm Technol 36(10): 74-79.

- Weitzel MLJ (2012)The estimation and use of measurement uncertainty for a drug substance test procedure validated according to USP 1225 Accreditation Quality Assurance 17(2): 139-146.

SQL SERVER – Performance Tuning – Part 1 of 2 – Getting Started and Configuration

SQL SERVER – Performance Tuning – Part 1 of 2 – Getting Started and Configuration

Performance tuning is always a complex subject whenever one has to deal with it. When I was beginning with SQL Server, this was the most difficult area for me. However, there is a saying that if one has to overcome their fear one has to face the fear first. So I did exactly this. I started to practice performance tuning.

Early in my career I often failed when I had to deal with performance tuning tasks. However, each failure taught me something. It took a quite a while and about 100+ various projects before I started to consider myself a guy who knows something about performance tuning.

Every performance tuning exercise gave me new experience. I firmly believe in “Practice makes perfect” when I am involved in performance tuning projects. There were times when I could figure out problems by just looking at few configurations and settings. When asked how I knew this so quickly my usual answer was “experience.”

Quite often I get requests to share my expertise in performance tuning. This is when I wish it were easier. If I had to go back and do one thing during my whole career of performance tuning, I would document every single performance tuning rule that I have come across in my life. Once everything is documented, the expertise of performance tuning can be measured, and the results of these rules can give additional confidence while doing performance tuning.

Performance tuning used to be the responsibility of the DBA and developers who built the code. However, recently I have seen in many organizations that there are dedicated experts who are responsible for performance tuning of the server. Often they have to manage multiple servers and heterogeneous RDBMS. The job is more stressful and more challenging than we know.

I constantly try to test out various aspects of performance tuning as well as all available tools which can help me select the optimal solution for performance tuning. I recently tested out DB Optimizer from Embarcadero and here is very short tutorial of how this tool can be used, as well few of the pros and cons.

Let us divide this article into the following subjects.

- Getting Started

- Analysis and Detection

- Tuning and Optimizing

Getting Started

In this section we will go over basic steps to install DB Optimizer XE2 Version 3.0. First of all go to DB-Optimizer-XE and download the trial version of the DB Optimizer. The installation is very quick and simple. The installation is pretty much selecting button NEXT … NEXT and Finish!

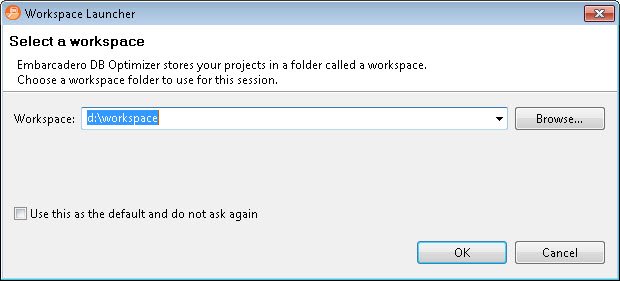

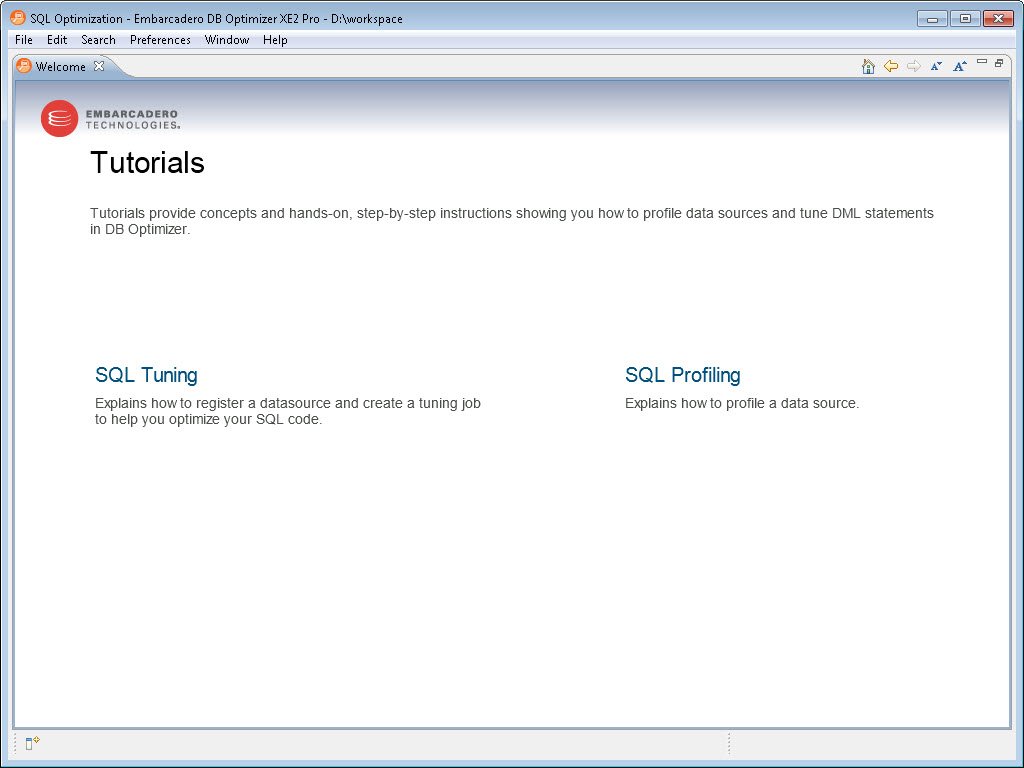

After you install and load the DB Optimizer, and right after the splash screen it will ask for the workspace where all the files of this optimization project will be saved. I think the most difficult part is over by now. From here the tool is very easy to use and self-explanatory. The very first screen has three sections: overview, tutorial and workbench.

I strongly suggest that if you are new to the tool then go over the tutorial. The tutorial is well organized and very detailed. It not only explains how one can do various performance tuning tasks but it often explains why they are done a certain way.

Once the tutorial is over or if you are an experienced user, head to the workbench directly. Workbench is the area where we will do various performance tuning related tasks.

Configuration

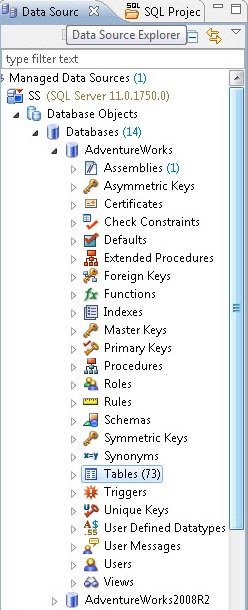

Here is one thing I have to point out: even though there is no wizard or step-by-step setup guide – the interface is very intuitive. For example, as soon as you start workbench, the very first screen is data source. Once data source is configured a simple right click on the data source is all one needs to work with performance tuning aspects.

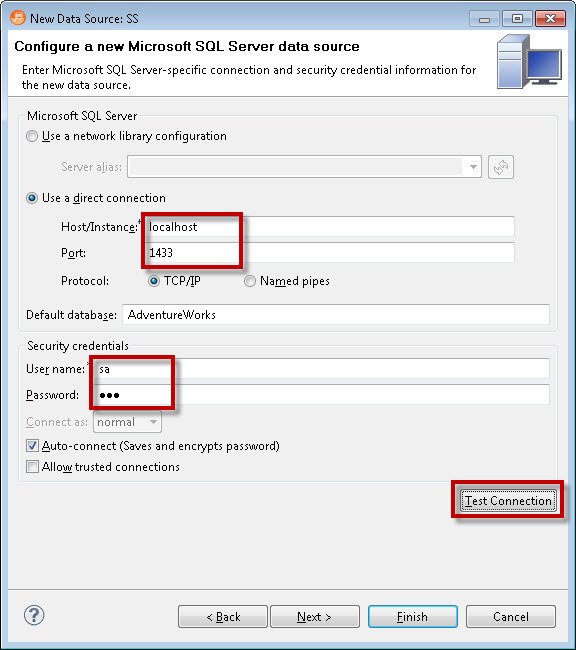

This tool lets you connect to multiple data sources. We will select SQL Server as the data source and give it an easy name so we can refer to it easily in the future. One specific thing worth noting is that while connecting to the data source, it lets you specify what kind of data source you are connecting to. Are you connecting to Development, Test, QA or Production? This is very essential so that we do not deploy changes to the incorrect server while we are performing various tasks with DB Optimizer.

The next step is to provide details of the connection string and check if the connection is successful by clicking on the Test Connection button at the bottom. If the host address along with username and password is correct, the connection will pop up with a “Connection was successful” message.

Click on Finish after the connection is successfully made to the data source. It will register the data source and bring up the same screen where you started.

Expand the data source which we have registered. Once you expand you will see every single object listed under the data source.

After configuring the data source, the next task is to configure the profile.

Profile configuration is a very easy process and we define where we will be storing various captured data in the database as well what data source will be captured.

Once the data profile is configured go ahead and click on Profile. I think we are now 100% done in configuring the setup. The next step is to analyze our profiled data.

In next blog post we will continue with the second part of Analysis, Detection, Tuning and Optimizing. Till than you can download and configure DB-Optimizer-XE.

This second part of Performance Tuning – Part 1 of 2 – Getting Started and Configuration. I suggest you read the first part before continuing on this second part.

Analysis and Detection

If you have noticed that configuration of the data source and profile is a very easy task and if you are familiar with the tool, this can be done in less than 2 minutes. However, while configuration is an important aspect, appropriate analysis of the data is more important since that is what leads us to appropriate results.

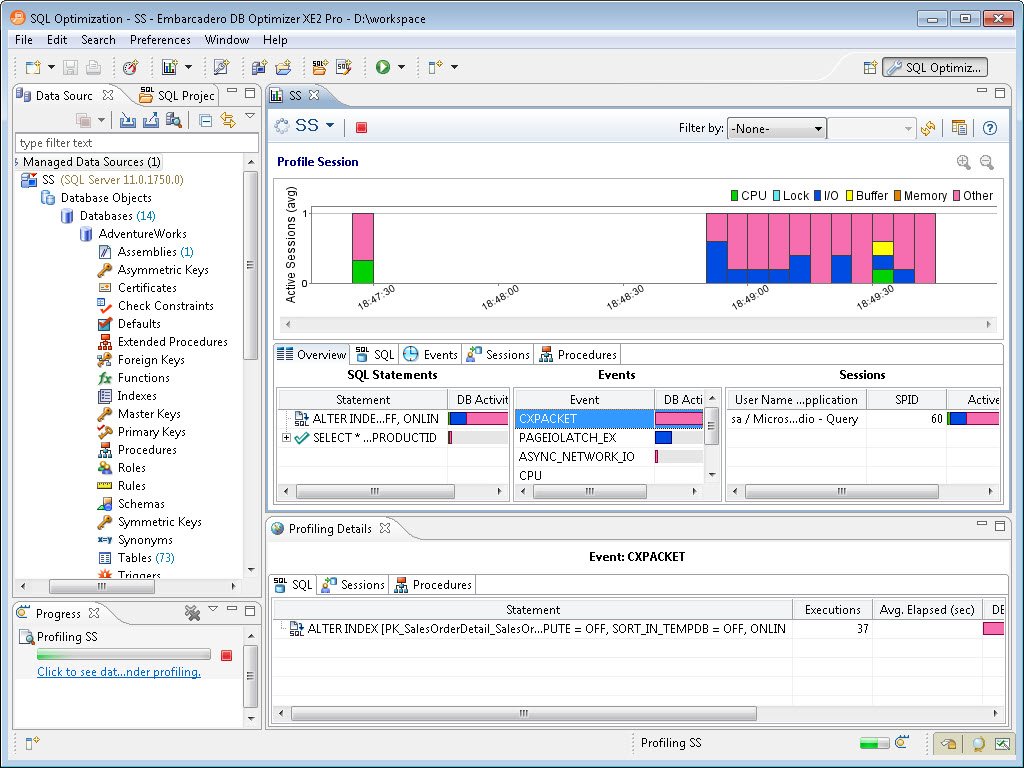

Once configuration is over, the screen shows the results of the profiling session. Now the screen will start returning information from various sources in detail. After a few minutes it will display the exact information regarding what is going on under the hood of SQL Server.

Let us see each of the sections in detail. The left most part will show the SQL statement. The SQL statement section is extremely important, once expanded it will start showing exactly what is going on with respect to the SQL Server load in the system.

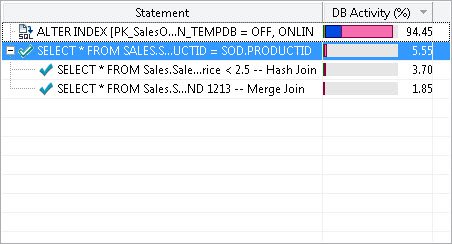

Identifying the Most Critical Query

A frequently-asked question by developers is: in real systems there are millions of queries executing in a given time period. If I have to begin tuning queries, where should I begin, and which query should I tune to give me maximum return with respect to the performance? Well, this is the precise question the SQL Statement area attempts to answer. It displays the queries in terms of the percentage of DB Activity. One can easily narrow down the query which is most expensive and focus on tuning the query.

Identifying Wait Statistics

This is another very popular aspect covered in the tool. If you know me, I am a big believer in SQL Wait Stats. When SQL Server is executing any task, and if for any reason it has to wait for resources to execute the task, this wait is recorded by SQL Server with the reason for the delay. Later on we can analyze these wait stats to understand the reason the task was delayed and maybe we can eliminate the wait for SQL Server. It is not always possible to remove the wait type 100%, but there are a few suggestions that can help. Here is the SQL Server Wait Stats ) series which I have written which explains all the major wait stats in detail.

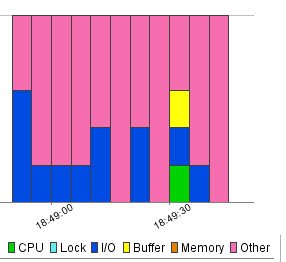

Profiling Active Sessions with Resources

Well, personally I found this section the most useful and insightful. The biggest challenge is to identify which resource is bottlenecked in SQL Server. It is quite possible that all the queries are running at their best. There is nothing left to tune with respect to the query, but still the performance is very slow. The reason can be that resources on the system are bottlenecked. The scarcity of resources is creating the performance degradation scenario. In this top horizontal section of the window it clearly maps what time exactly which resource was utilized. The same colors are also used in the SQL Statement, Events and Sessions section which help visually map queries, resources and events.

I believe once the query profiling is done, keeping resources in mind, the performance tuning task is much easier. Here are the steps a developer should be taking when they are trying to optimize queries using DB Optimizer:

- Identify slow query or offending query

- Identify the resource bottleneck using active sessions and events

- Tune the offending query

It is equally important to tune the offending query as much as it is to identify the offending query. In the next section we will see how DBOptimizer can help optimize the queries in a few clicks.

Tuning and Optimizing

When we identify the offending query our next intention is to optimize the offending query. To tune any query we need to understand all the details surrounding to the query. What impressed me the most about this tool was that it’s instant database-level analysis. Once we select the query to tune we can also see various deep down profiling details for individual queries as well.

First go to SQL statement and click on SQL Text. It will give the exact statement which ran, with all the parameters. The next tabs explain events, sessions and procedures which were associated with SQL Statement.

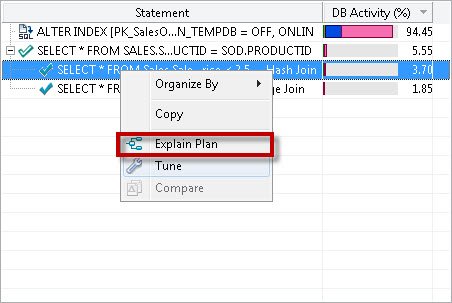

Once the profiling details associated with any query is understood the next important task is to tune the query and understand the execution plan. In the SQL Statement query if you right click there are two options visible, 1) Explain Plan and 2) Tune

Explain Plan

You can see that now we are entering into specific details related to the query.

In this section you can see very clear tabular formatted explanations of the execution plan. There is various information associated with the execution plan. Personally I prefer to see the execution plan in XML format as it explains various details. However, when in a hurry it is not always possible to spend time on XML. There are cases when any organization does not have time and bandwidth to do this.

The Execution Plan section is not only important for understanding queries but it is a great learning tool as well. I personally spend quite a lot of time on the execution plan. I spend time even on good queries as it is important to know what made the query so good. I think the Explain Plan can aid in learning this technique. Pay attention to I/O Cost, CPU Cost, Row size, Rows and Execution count.

Tuning Execution Plan

I think this is the most interesting part of the DB Optimizer tool. It is called TUNE! I think in one simple word it says what it does and when I saw this for the first time, I just felt like clicking on it. There are cases when a developer or DBA does not want to dig deeper in the scenario, or there are cases when they just want to try alternate scenarios as well. I believe this is a good place to start. Just hit Tune and let DBOptimizer suggest the best plan.

Though I was intrigued to hit Tune, I was bit wary to click it as I was not sure if the tool would then execute any T-SQL statements or tune script against the database. I am the guy who trusts anybody and everybody, but even then, I always like to at least know what is going to happen when it is about my most critical database. Fortunately, when I clicked on Tune, it showed me an overview screen. Where there were two options provided: 1) Generate Cases and 2) Perform Detail Analysis.

This was a very clean and easy to understand interface. The part I liked was generating cases. Often when I am developing T-SQL I want to try out various options but there is always a deadline to finish the project, and it makes sense to spend a limited amount of the time on any query as Return of Time Invested is very important as well. I think this tool can automate this mundane task and help figure out what the optimal solution is.

Clicking on the analysis tab also provides various index options. Additionally, it provides the cost for each index so you know which will be most efficient for a specific query.

If you click on Create Index it also generates the index for you, which you can apply to your database. I think there are many other features which we can discuss here, if I had the time. Additionally, please note that creating an index may or may not be the optimal solution. It is quite possible that a query re-write may solve your problem or even dropping unused indexes is the solution. Anyway, this tool gives lots of suggestions along with their impact, this makes decisions much easier.

Caution

I have always believed in the philosophy that there is no replacement for the human brain. No matter the advancements in artificial intelligence, there are places and cases when the human brain makes better decisions than any machine or tool. Though I have found DB Optimizer very well balanced and organized, I will also watch its decisions. It is great tool which so far has proved itself, but just like any other tool, it should be used with human intelligence. Just like Missing Index DMV gives plenty of Indexes to create but the DBA usually selects the most useful index and creates only those which are necessary. In the same way, there will many solutions this tool will suggest along with their impact. It is our responsibility to select the most powerful solution, keeping overall server health in focus.

Top 5 things I like the most

There are quite a few things which I really liked it but I decided to list the top 5 here to keep it sharp and short.

- Clear and easy to navigate interface

- Index analysis is fairly accurate and very informative

- Execution plan layout and arrangement is very well organized

- Painless, simple installation

- Well documented help and community support

Top 3 things for wish list for next version

Nobody is perfect. After using this tool for a long time, I have a wish list for this product. I am listing the top 3 items from this list.

- Further explanation of the wait stats

- Do not generate case – currently it generates various cases, there are times when I do not want cases to generate. I think this feature is a bit over-ambitious. Basic users who this tool targets may never need it

- Additional reference materials for the execution plans

Summary

Overall, DB-Optimizer-XE is indeed a performance tuning flagship product from Embarcadero. As I said earlier, the right tool can make life easier for experts as well remove all the uncertainty from a performance tuning project. I also found this product extremely easy to use, with a very clear user interface and even some fun options (like Tune). I think anyone who is interested in performance tuning should at least give DB Optimizer a try to see if they like it.

DB-Optimizer-XE has a lot of great features, but it’s not perfect. Keep in mind that you have to be smarter than the tools you use, so you can’t rely on one program alone to solve all your problems. However, with that said, I highly recommend this program to anyone who is facing performance tuning now and in the future.

Reference: Pinal Dave (https://blog.sqlauthority.com)

订阅:

评论 (Atom)